Uppy 3.3 to 3.13: conditional S3 multipart, signing on the client, speedy handling of 10k files and much much more

This is a big Uppy update post, covering releases from 3.3.0 to 3.13.0!

In this issue: the long-awaited unified S3 plugin which can switch between regular and multipart uploads, improved performance when adding and validating 10k+ files, and stability improvements and bug fixes. The Transloadit plugin is now also easier to configure and leaner in bundle size, since we removed socket.io-client in favor of Server-Sent Events.

Please make a cup of something tasty ☕️ (in a non-spillable container), as this will be quite a ride.

AWS S3

Merging of the plugins

After years of separating regular non-multipart uploads and multipart uploads, we are finally merging the two sibling plugins: @uppy/aws-s3 and @uppy/aws-s3-multipart. Here’s the brief version of what you need to know, with details to follow in the next section:

- @uppy/aws-s3-multipart is deprecated. You should use @uppy/aws-s3 and set the shouldUseMultipart: true option to get the same “multipart always enabled” behavior. We plan to keep @uppy/aws-s3-multipart around as an alias for the next major cycle and add an install-time warning when Uppy 4.0.0 is released.

- @uppy/aws-s3 now hosts two plugins:

  - the legacy one, untouched, to guarantee backward compatibility. We plan to remove it in the next major, so we recommend moving away from it and reporting anything you find missing in the new plugin.

  - the new merged plugin, available if you pass the shouldUseMultipart option.

Our plan for this merge is to maintain the backward compatibility until the next major, while also providing forward compatibility so you can try our new plugin and give us some feedback before we remove the old code for good.

Conditional Multipart Explained

Multipart and “regular” uploads have different use cases. The advantages of multipart uploads are:

- Improved throughput – You can upload parts in parallel to improve throughput.

- Quick recovery from any network issues – Smaller part size minimizes the impact of restarting a failed upload due to a network error.

- Pause and resume object uploads – You can upload object parts over time. After you initiate a multipart upload, there is no expiry; you must explicitly complete or stop the multipart upload.

- Begin an upload before you know the final object size – You can upload an object as you are creating it.

However, the downside is request overhead, as it needs to do creation, signing, and completion requests besides the upload requests. For example, if you are uploading files that are only a couple kilobytes with a 100ms roundtrip latency, you are spending 400ms on overhead and only a few milliseconds on uploading. This really adds up if you upload a lot of small files.
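To make the arithmetic above concrete, here is a rough sketch. It assumes four overhead round trips per file (the count implied by the 400ms figure) and that no requests overlap; both are simplifications, and the function name is made up for illustration.

```js
// Rough estimate of time spent on multipart bookkeeping requests alone.
// Assumptions: `overheadRoundTrips` sequential round trips per file and no
// parallelism between files. Real uploads overlap requests, so treat the
// result as an upper bound.
function multipartOverheadMs({ fileCount, roundTripMs, overheadRoundTrips = 4 }) {
  return fileCount * overheadRoundTrips * roundTripMs;
}

console.log(multipartOverheadMs({ fileCount: 1, roundTripMs: 100 })); // 400
console.log(multipartOverheadMs({ fileCount: 1000, roundTripMs: 100 })); // 400000
```

For a thousand tiny files that is 400 seconds of pure overhead in the worst case, while the payload itself takes almost no time to transfer.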

So now you can use just one plugin, @uppy/aws-s3, and enable multipart conditionally, even per file:

```js
uppy.use(AwsS3, {
  shouldUseMultipart(file) {
    // Use multipart only for files larger than 100MiB.
    return file.size > 100 * 2 ** 20;
  },
});
```

or

```js
uppy.use(AwsS3, {
  shouldUseMultipart: true,
});
```

Please see the new shouldUseMultipart: boolean | function option for details.

Signing on the client

By default, when you upload to S3 with Uppy, every file, or every chunk in case of Multipart, needs to be signed on the server. For many small files or files with many chunks this means a few additional requests per file/chunk of the upload.

To address this issue and speed up the uploads by roughly 20%, we are introducing a new option: getTemporarySecurityCredentials: boolean | function. When true, both S3 and S3 Multipart uploads will be signed on the client using the AWS Security Federation Token, created once per user (until it expires) on your backend rather than a unique signed URL for every single chunk.

You should opt in to this only if you are comfortable giving end users direct access to signing files for your bucket.
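As a sketch of the function form, the helper below builds a callback that asks your own backend for temporary credentials. Everything specific here is an assumption to check against the plugin documentation: the `/sts-token` endpoint and the `makeCredentialsGetter` name are hypothetical, and the `{ credentials, bucket, region }` response shape and the `signal` argument reflect our reading of the docs rather than anything stated in this post.

```js
// Hypothetical factory: returns a function suitable for passing as the
// getTemporarySecurityCredentials option. `fetchImpl` is injectable so the
// helper can be exercised without a network.
function makeCredentialsGetter(endpoint, fetchImpl = fetch) {
  return async function getTemporarySecurityCredentials({ signal } = {}) {
    const response = await fetchImpl(endpoint, { signal });
    if (!response.ok) {
      throw new Error(`Could not fetch temporary credentials (${response.status})`);
    }
    // Assumed backend response shape:
    // { credentials: { AccessKeyId, SecretAccessKey, SessionToken, Expiration },
    //   bucket, region }
    const body = await response.json();
    if (!body.credentials || !body.bucket || !body.region) {
      throw new Error('Unexpected credentials response shape');
    }
    return body;
  };
}
```

You would then pass it along the lines of `uppy.use(AwsS3, { getTemporarySecurityCredentials: makeCredentialsGetter('/sts-token') })`. The backend behind that endpoint is where the token is actually created, so access control belongs there.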

Stability and bug fixes

- We’ve improved the internal handling of RateLimitedQueue to make retries more robust and increased the priority of abort and complete requests.

- While we were at it, we’ve also fixed resuming in single-chunk uploads, and improved chunk size calculation.

- Golden Retriever integration with S3 Multipart was fixed.

Additionally, we’ve disabled pause-resume buttons in the UI for remote S3 Multipart uploads — they are not supported on Companion, so the UI now reflects that.

Core

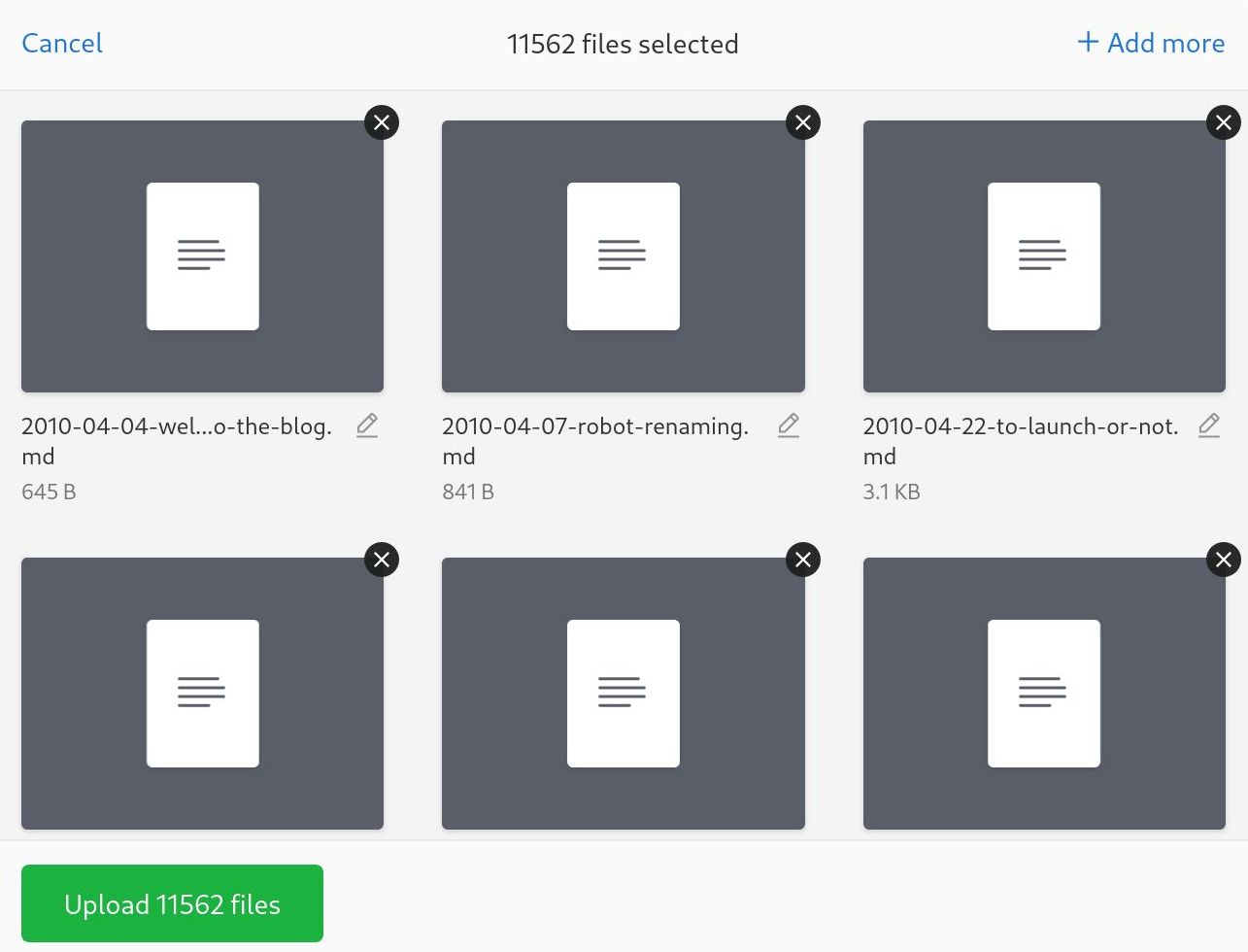

Performance of adding and uploading big batches of files has (yet again) been greatly improved with refactors in both Uppy Core and Provider Views (that’s what we internally call all remote UIs: Instagram, Unsplash, Dropbox).

We’ve been able to achieve this by refactoring out totals validation for Restrictions (maxTotalFileSize, maxNumberOfFiles): instead of doing it for each file, we perform the check at the end, after all the files have been added/validated. This was confirmed to work with 10k+ files! 🚀
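The difference between the two strategies can be sketched as follows. This is an illustration of the idea only, not Uppy Core’s actual code; the function names are invented, and the restriction names are the ones mentioned above.

```js
// Throws if the combined file list violates a totals restriction.
function checkTotals(files, { maxNumberOfFiles, maxTotalFileSize }) {
  if (maxNumberOfFiles != null && files.length > maxNumberOfFiles) {
    throw new Error(`You can only upload ${maxNumberOfFiles} files`);
  }
  if (maxTotalFileSize != null) {
    const total = files.reduce((sum, file) => sum + file.size, 0);
    if (total > maxTotalFileSize) {
      throw new Error(`Total size exceeds ${maxTotalFileSize} bytes`);
    }
  }
}

// Per-file strategy: totals are re-checked after every added file.
// In this sketch that re-sums the whole list each time, so cost grows
// quadratically with the batch size.
function addFilesCheckingEachTime(existing, incoming, restrictions) {
  const files = [...existing];
  for (const file of incoming) {
    files.push(file);
    checkTotals(files, restrictions);
  }
  return files;
}

// Batch strategy: add everything, then check totals once at the end.
function addFilesCheckingOnce(existing, incoming, restrictions) {
  const files = [...existing, ...incoming];
  checkTotals(files, restrictions);
  return files;
}
```

Both variants accept or reject the same batches; the second one simply does the totals work once instead of once per file.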

We’ve also fixed an issue with delayed throttled progress events, which could lead to incorrect progress.

Transloadit

assemblyOptions

We’ve introduced a new option called assemblyOptions: object | function, which replaces the getAssemblyOptions, params and fields options (those are now deprecated and will be removed in the next major).

Here’s how you can use the new option as an object:

```js
uppy.use(Transloadit, {
  assemblyOptions: {
    params: {
      auth: { key: 'key-from-transloadit' },
      template_id: 'id-from-transloadit',
      steps: {
        // Overruling Template at runtime
      },
    },
    signature: 'generated-signature',
    fields: {
      // Dynamic or static fields to send along
    },
  },
});
```

And here’s an example with a function, to be able to set meta fields per-file, for instance:

```js
uppy.use(Transloadit, {
  assemblyOptions(file) {
    return {
      params: {
        auth: { key: 'TRANSLOADIT_AUTH_KEY_HERE' },
        template_id: 'xyz',
      },
      fields: {
        caption: file.meta.caption,
      },
    };
  },
});
```

Server-sent events

Historically, Transloadit supported progress updates via Socket.io. It is a robust and stable package, but came with a “price-tag” in the form of bundle size: 38.3 kB minified. So it’s been on our minds for a while to replace it with something more lightweight, without breaking backwards-compatibility for the older clients (so simply removing Socket.io on the server in favor of plain WebSockets is not desirable).

The answer: Server-sent events. It’s a simple low-overhead one-way connection from Transloadit servers to @uppy/transloadit. As was the case with Socket.io, we fall back to HTTP polling every 2 seconds if the SSE connection fails for some reason.

Socket.io is still around in @uppy/transloadit, but will be removed soon, once SSE proves itself in production.
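The SSE-with-polling-fallback pattern described above can be sketched with the standard EventSource API. This is not the @uppy/transloadit implementation: `watchAssembly`, the JSON message format, and the `pollStatus` callback are assumptions made for illustration. Note also that a real EventSource retries on its own after an error; closing it and switching to polling right away is a simplification.

```js
// Minimal sketch: prefer an SSE stream, fall back to polling on failure.
// `EventSourceImpl` is injectable so the logic can run outside a browser.
function watchAssembly({
  sseUrl,
  pollStatus, // hypothetical: async function returning the current status
  onUpdate,
  EventSourceImpl = globalThis.EventSource,
  intervalMs = 2000, // the post mentions polling every 2 seconds
}) {
  let timer = null;
  let source = null;

  const startPolling = () => {
    if (timer !== null) return; // already polling
    timer = setInterval(async () => {
      try {
        onUpdate(await pollStatus());
      } catch {
        // Ignore a failed poll; the next tick tries again.
      }
    }, intervalMs);
  };

  if (typeof EventSourceImpl === 'function') {
    source = new EventSourceImpl(sseUrl);
    // Assumption: the server sends JSON-encoded status in each message.
    source.onmessage = (event) => onUpdate(JSON.parse(event.data));
    source.onerror = () => {
      source.close();
      startPolling();
    };
  } else {
    startPolling(); // no SSE support at all
  }

  return {
    isPolling: () => timer !== null,
    close() {
      if (source) source.close();
      if (timer !== null) clearInterval(timer);
      timer = null;
    },
  };
}
```

Calling `close()` on the returned handle stops both the stream and any polling timer, which matters when an upload is cancelled.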

Bug fixes

- Reset the tus key in the file on error, so retried files are re-uploaded.

- Clean up event listener to prevent cancelled assemblies.

- Make sure fields is not nullish when there are no files in Assembly.

Companion

Refresh tokens

We’ve implemented refresh tokens for Dropbox and Google Drive to solve issues where tokens would expire in the middle of a long upload and then the upload would fail.

Here’s how it’s implemented in Companion:

- We changed the logic for handling a 401 from Companion: Uppy will now call a new /:provider/refresh-token endpoint, which will give Uppy a new access token.

- refresh_token is now stored inside the Uppy auth token along with access_token (encrypted JWT) for providers that give a refresh token (before, only access_token was stored; now we store both as a JSON.stringify’d document).

Bug fixes and improvements

- Fixed a bug where non-ASCII metadata crashed Companion. The AWS SDK doesn’t encode metadata by itself, so now we do it in Companion.

- Switched from aws-sdk v2 to @aws-sdk/* v3

- Upgraded Grant dependency

- We now send expire info for non-multipart uploads and added connection keep-alive to Dropbox to improve connection stability

- Merged Provider and SearchProvider internal remote source classes.

- Increased max limits for remote file list operations and fixed part listing in S3

Url

We’ve upgraded the Url plugin to use the filename from the Content-Disposition header of the file you are importing, instead of relying on the URL (but kept the latter as a fallback) (#4489). So now your files have proper names instead of noname.
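The header-first, URL-fallback order can be sketched like this. It is a simplified parser written for illustration, not the code from #4489, and it does not cover every case in RFC 6266; `filenameFromResponse` is a made-up name.

```js
// Decode percent-encoding without throwing on malformed input.
function safeDecode(value) {
  try {
    return decodeURIComponent(value);
  } catch {
    return value;
  }
}

// Pick a filename: Content-Disposition first, then the URL path, then 'noname'.
function filenameFromResponse(contentDisposition, url) {
  if (contentDisposition) {
    // Extended form, e.g. filename*=UTF-8''na%C3%AFve.txt
    const extended = /filename\*\s*=\s*UTF-8''([^;]+)/i.exec(contentDisposition);
    if (extended) return safeDecode(extended[1].trim());

    // Plain form, quoted or bare, e.g. filename="report.pdf"
    const plain = /filename\s*=\s*(?:"([^"]*)"|([^;]+))/i.exec(contentDisposition);
    if (plain) {
      const name = (plain[1] ?? plain[2]).trim();
      if (name) return name;
    }
  }
  // Fallback: last non-empty segment of the URL path.
  const lastSegment = new URL(url).pathname.split('/').filter(Boolean).pop();
  return lastSegment ? safeDecode(lastSegment) : 'noname';
}

console.log(filenameFromResponse('attachment; filename="report.pdf"', 'https://example.com/download?id=1')); // report.pdf
console.log(filenameFromResponse(null, 'https://example.com/files/cat.png?x=1')); // cat.png
```

The first call shows why the header matters: the URL alone would have yielded `download`, which says nothing about the file.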

Dashboard and Status Bar

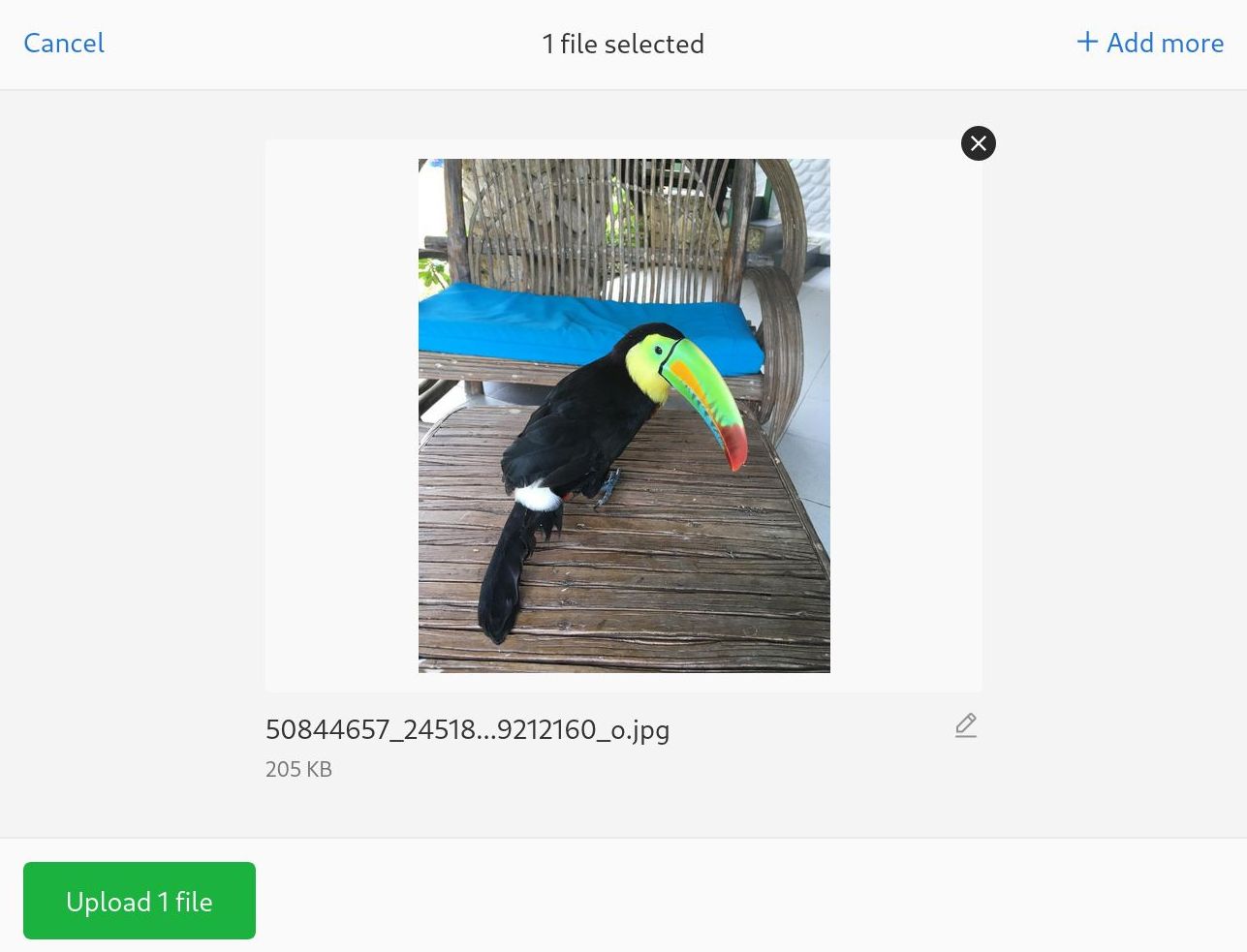

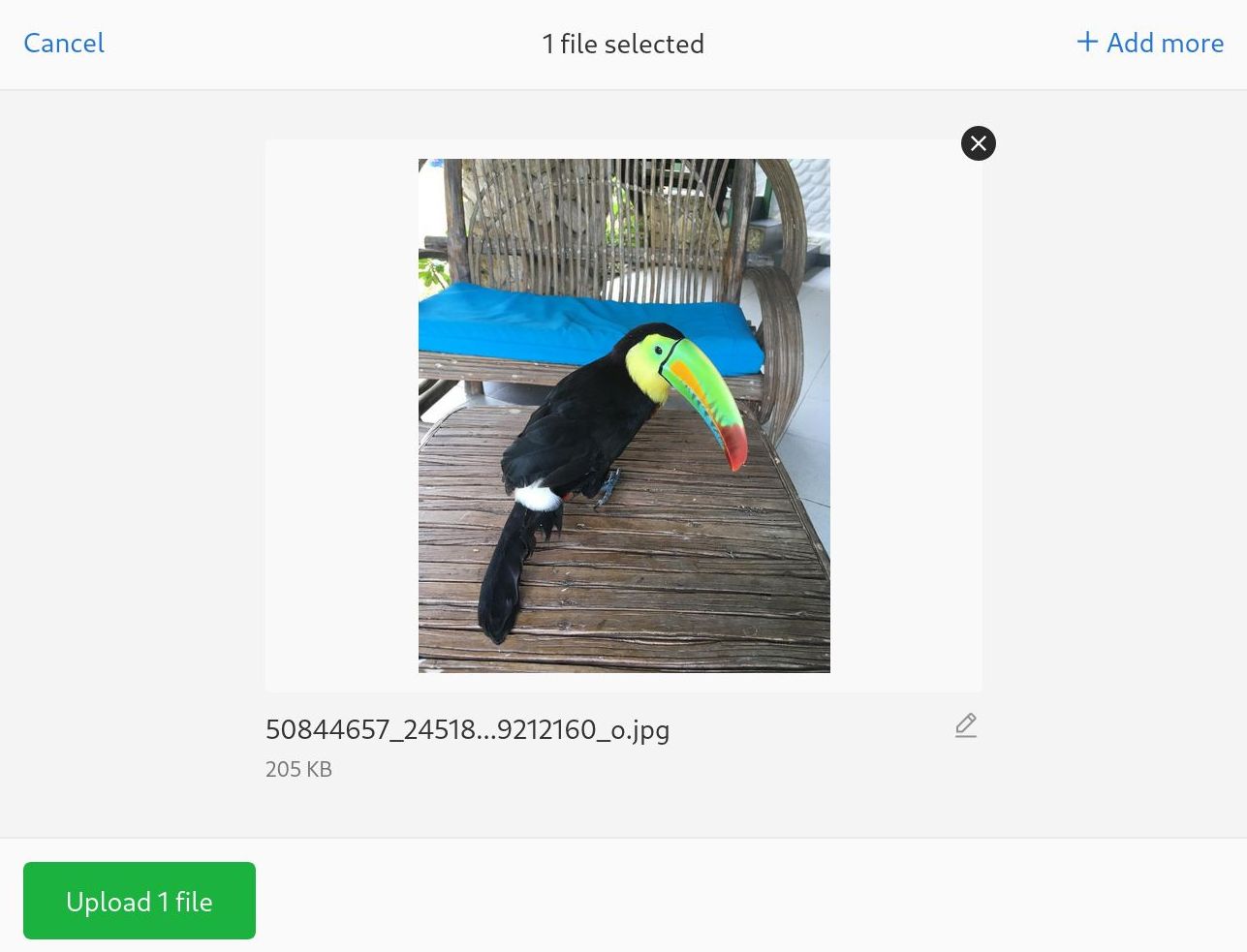

Single File Mode has been improved to adapt to heights of less than 400px: the UI turns back to grid in this case. We are also not using fit: cover anymore to avoid cropping important parts of the image.

We’ve also added an option to disable the Single File mode, as it can be unwanted in certain use cases: singleFileFullScreen.

If you wrapped Uppy Dashboard in a <form> element, it could be accidentally submitted when a user pressed Enter to save meta fields or enter URLs. Now we’ve added a form="" attribute, connected to an empty <form> in the document root, to prevent the outer form from being submitted.

A bug has been fixed that allowed clicking on buttons and links in Dashboard disabled mode.

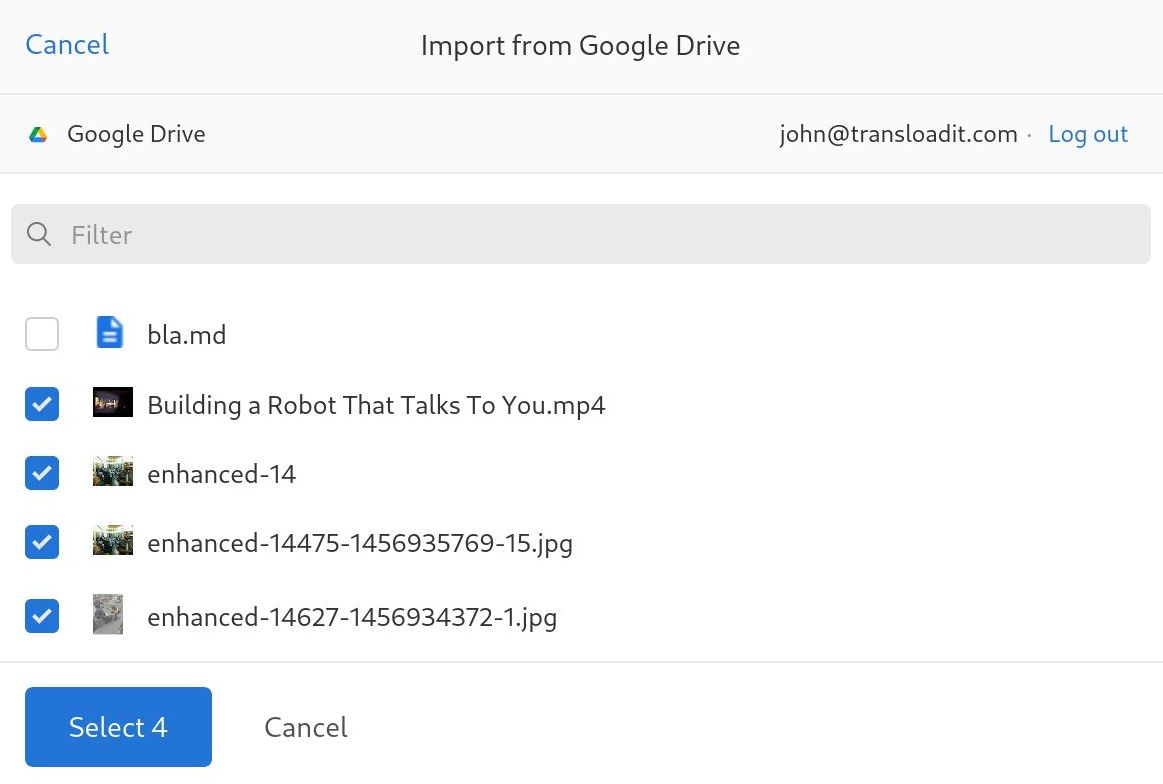

Range selection of remote files has been fixed: you can now shift+click to select multiple files again. We’ve added the VirtualList component, which is already used in Uppy’s “selected files” screen, to remote file lists. So now scrolling through 10k+ files is a breeze 🌬️
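For readers unfamiliar with list virtualization, the core of it is computing which rows are worth rendering for the current scroll position. The sketch below shows that generic calculation under the assumption of fixed-height rows; it is not the code of Uppy’s VirtualList, and all names are illustrative.

```js
// Compute the slice of rows to render for a fixed-row-height list.
// `overscan` renders a few extra rows on each side to avoid flicker while
// scrolling. `end` is exclusive; `offsetY` is where the first rendered row sits.
function visibleRange({ scrollTop, viewportHeight, rowHeight, totalRows, overscan = 5 }) {
  const firstVisible = Math.floor(scrollTop / rowHeight);
  const visibleCount = Math.ceil(viewportHeight / rowHeight);
  const start = Math.max(0, firstVisible - overscan);
  const end = Math.min(totalRows, firstVisible + visibleCount + overscan);
  return { start, end, offsetY: start * rowHeight };
}

// 10,000 rows of 35px in a 400px viewport, scrolled to row 1000:
console.log(visibleRange({ scrollTop: 35000, viewportHeight: 400, rowHeight: 35, totalRows: 10000 }));
// { start: 995, end: 1017, offsetY: 34825 }
```

Only 22 of the 10,000 rows are in the DOM at that point, which is why scrolling stays smooth regardless of list size.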

Status Bar

- Fixed ETA when Uppy recovers its state.

- Removed the throttled component to fix a bug where state would become shared between multiple status bars on a page.

Locales

We’ve added support for Hindi and Mexican Spanish. French, Spanish and Chinese locales have been improved.

Uploaders

- Fixed an issue where sockets were opened right away, ignoring the RateLimitedQueue, which led to bugs in all plugins that handle remote uploads. Additionally, when a file is removed (or all are canceled), we call controller.abort on queued requests.

- Fixed a bug with sockets being closed while the upload was still in progress.

- In XHR Upload we’ve added support for arrays in metadata and an 'upload-stalled' event.

Miscellaneous

- @uppy/golden-retriever has been refactored to modernize the codebase.

- We’ve switched more non-critical errors to warnings.

- Improved fallbacks for the drag & drop API.

- The React Native example has been modernized and updated.

- uppy.resetProgress() in Core has been fixed.

We hope you’ve enjoyed this update post and all the fixes and features we’ve worked on! As always, please see the full changelog on GitHub: 3.4.0 to 3.13.0.